What happened, in plain English

On November 18, 2025, Microsoft and Nvidia said they’ll invest in Anthropic while striking a sweeping cloud-and-chips pact that puts Anthropic’s Claude models on Microsoft’s Azure AI Foundry. Anthropic, in turn, agreed to spend $30 billion on Azure compute and to contract up to 1 gigawatt of AI capacity built on Nvidia’s current Grace Blackwell systems and the next-generation Vera Rubin platform. Reuters confirmed Microsoft’s investment of up to $5 billion and Nvidia’s of up to $10 billion, alongside the Azure commitment and 1 GW target.

Why this alliance matters

- Claude lands on Azure. Microsoft will offer Anthropic’s latest Claude models through Azure AI Foundry, meaning enterprises can build with Claude alongside OpenAI, Cohere, xAI, Meta Llama and other options in the same governed platform. Trade press and Microsoft community posts say Foundry access will include current Claude family releases, expanding model choice for developers already standardized on Azure. Reuters, Microsoft Tech Community, CRN, GeekWire.

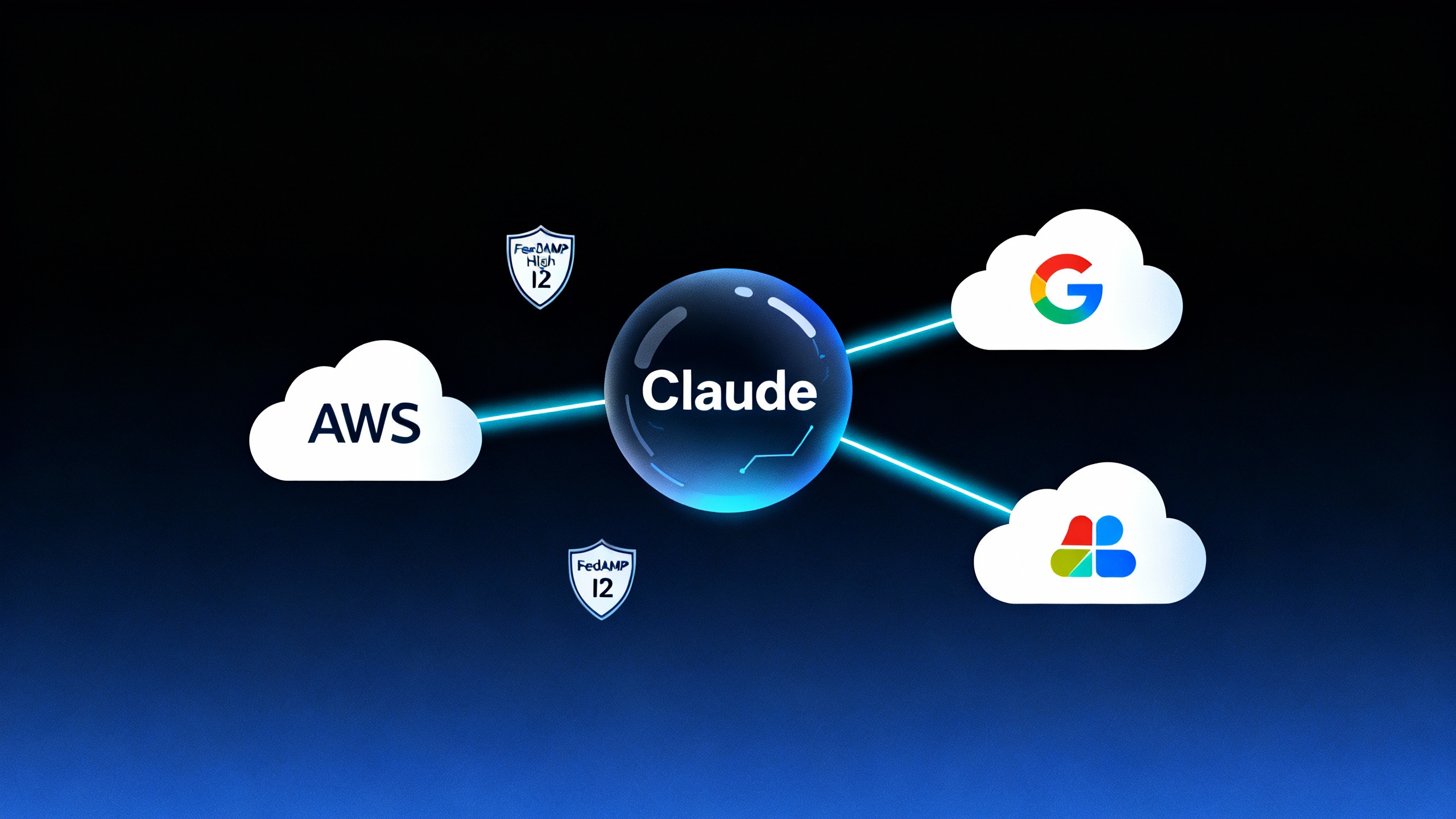

- Claude becomes truly multicloud. Claude is already on AWS via Amazon Bedrock and on Google Cloud’s Vertex AI (including FedRAMP High and IL2 authorizations). With Azure in the mix, Claude is now the rare “frontier” model available across all three major public clouds. Anthropic on Bedrock, Anthropic on Vertex AI, Reuters.

- Nvidia deepens ties with a top model lab. Nvidia will invest up to $10B in Anthropic and co-engineer performance and efficiency tuning for Anthropic’s future models on Blackwell and the forthcoming Vera Rubin architecture, aligning future GPUs to Claude’s workloads. Reuters, TechCrunch on Rubin, CNBC on Rubin timeline.

- Microsoft hedges. After renegotiating its OpenAI partnership this fall, Microsoft is widening its bench of frontier models. At the same time, Reuters notes Amazon remains Anthropic’s primary cloud and training partner, highlighting Anthropic’s multicloud stance. Microsoft blog on OpenAI evolution, Reuters, Amazon–Anthropic update.

The dollars, chips, and distribution — at a glance

Who’s committing what

| Player | Money in Anthropic | Compute/Capacity | Distribution impact |
|---|---|---|---|
| Microsoft | Up to $5B equity | $30B Azure spend by Anthropic; Azure Foundry access for Claude | Claude joins Azure AI Foundry; integration across Copilot stack signaled |
| Nvidia | Up to $10B equity | Co-design and 1 GW initial capacity on Grace Blackwell → Vera Rubin | Optimized Claude training/inference on Nvidia roadmaps |
| Anthropic | — | Commits to Azure and to 1 GW initial Nvidia capacity | Claude available across AWS Bedrock, Google Vertex AI, and now Azure Foundry |

Sources: Reuters, CRN, GeekWire.

What changes for builders and IT leaders

- Faster procurement, more optionality. If your estate runs on Azure, you can now standardize procurement, governance, security, and observability for Claude alongside OpenAI and other models in Foundry. That reduces vendor sprawl while preserving multi-model flexibility. Microsoft Tech Community.

- Multicloud portability. Being able to run Claude on AWS Bedrock, Google Vertex AI (with FedRAMP High/IL2), and Azure Foundry lowers switching costs between clouds and helps regulated teams align the same model to different compliance baselines. Anthropic on Vertex AI, Anthropic on Bedrock.

- Roadmap headroom. The 1 GW compute plan (industry execs estimate $20–$25B of hardware at that scale) and Nvidia’s Rubin timeline suggest headroom for long-context, agentic, and tool-using workloads that are compute hungry. Reuters, CNBC.

Tip: How to try Claude on Azure

- In Azure AI Foundry, open the model catalog and select a Claude model to deploy as a managed endpoint.

- Start with retrieval and document understanding benchmarks to compare Claude vs. your current default.

- Use Foundry’s model router to A/B route prompts across models for latency, quality, and cost before you commit.

- For regulated workloads, map the same prompt chains to Bedrock/Vertex AI regions to validate portability and controls.
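The side-by-side comparison in the steps above can be sketched in a few lines of Python. This is a minimal illustration, not Foundry's API: `candidate_model` and `current_default` are hypothetical stand-ins for whatever client calls reach your deployed endpoints, and the keyword-match scorer is an assumed placeholder for a real quality metric.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    prompt: str
    expected_keywords: List[str]


def keyword_score(answer: str, keywords: List[str]) -> float:
    """Fraction of expected keywords found in the answer (case-insensitive)."""
    if not keywords:
        return 0.0
    text = answer.lower()
    return sum(k.lower() in text for k in keywords) / len(keywords)


def compare_models(
    models: Dict[str, Callable[[str], str]],
    cases: List[EvalCase],
) -> Dict[str, Dict[str, float]]:
    """Run every case through every model; report mean score and latency."""
    results: Dict[str, Dict[str, float]] = {}
    for name, call in models.items():
        scores, latencies = [], []
        for case in cases:
            start = time.perf_counter()
            answer = call(case.prompt)
            latencies.append(time.perf_counter() - start)
            scores.append(keyword_score(answer, case.expected_keywords))
        results[name] = {
            "mean_score": mean(scores),
            "mean_latency_s": mean(latencies),
        }
    return results


# Hypothetical stand-ins: replace with calls to your deployed endpoints.
def candidate_model(prompt: str) -> str:
    return "The invoice total is 42 USD, due in March."


def current_default(prompt: str) -> str:
    return "The total is 42."


cases = [EvalCase("Summarize the invoice.", ["42", "March"])]
report = compare_models(
    {"candidate": candidate_model, "default": current_default}, cases
)
for name, stats in report.items():
    print(name, stats)
```

Keeping the models behind plain callables means the same harness can, in principle, be pointed at endpoints on another cloud when you validate portability.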

Strategy: circular spending or smart capacity insurance?

The deal fits an industry pattern of “circular” AI spending in which cloud platforms and chipmakers invest in model labs that then buy back compute capacity and hardware. Analysts say the approach raises bubble risks, but also secures scarce GPUs and power for multi‑year roadmaps. Reuters analysis, Business Insider, Ars Technica.

From Microsoft’s vantage point, adding Anthropic reduces single‑model concentration risk as OpenAI diversifies onto AWS under a new seven‑year, $38B agreement. Nvidia, meanwhile, seeds future demand for Rubin-era systems by co‑designing with a top model lab. Reuters on OpenAI–AWS.

The compute and infrastructure race, by the numbers

- 1 GW initial capacity earmarked for Anthropic’s workloads on Nvidia systems; industry estimates peg 1 GW of AI computing at roughly $20–$25B of hardware investment. Reuters.

- Nvidia’s Vera Rubin platform is slated to arrive in 2026, following Blackwell Ultra, keeping Nvidia on an annual cadence that directly benefits large‑scale reasoning and agentic tasks. CNBC, TechCrunch.

- Anthropic also announced a $50B plan to expand U.S. data centers with partner Fluidstack, echoing the scale of the broader AI infrastructure buildout. Anthropic, AP coverage, The Guardian.
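To put the headline figures on a common scale, here is a quick back-of-the-envelope calculation using only numbers reported above. The variable names are illustrative, and the equity amounts are the "up to" ceilings rather than confirmed totals.

```python
GIGAWATT_IN_WATTS = 1_000_000_000

# Industry estimate for hardware behind 1 GW of AI compute (USD).
hardware_low_usd = 20_000_000_000
hardware_high_usd = 25_000_000_000

# Implied hardware cost per watt of capacity.
low_per_watt = hardware_low_usd / GIGAWATT_IN_WATTS
high_per_watt = hardware_high_usd / GIGAWATT_IN_WATTS

# Maximum equity going into Anthropic vs. its Azure spending commitment (USD).
max_equity_usd = 5_000_000_000 + 10_000_000_000  # Microsoft + Nvidia ceilings
azure_commitment_usd = 30_000_000_000
commitment_to_equity = azure_commitment_usd / max_equity_usd

print(f"Hardware cost per watt: ${low_per_watt:.0f} to ${high_per_watt:.0f}")
print(f"Azure commitment is {commitment_to_equity:.1f}x the maximum equity")
```

On these figures, the estimate works out to roughly $20 to $25 of hardware per watt, and Anthropic's Azure commitment is twice the combined equity ceiling.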

What to watch next

- Product SKUs and SLAs. Microsoft still needs to publish the precise Foundry SKUs, regions, and rate limits for Claude; early signals point to deep integration with the Copilot family and the model router. GeekWire, Microsoft Tech Community.

- Regulatory scrutiny. U.S., U.K., and EU authorities have been probing Big Tech’s AI tie‑ups; multibillion‑dollar equity‑plus‑compute deals will likely draw fresh attention. For now, regulators have allowed several partnerships to proceed, but they’re watching consolidation closely. AP on FTC inquiry, CMA/UK coverage, Reuters on CMA–OpenAI.

- Power and sustainability. The industry’s compute targets (measured in gigawatts) will intensify debates over power procurement, grid impact, and water use. Expect buyers to demand increasingly transparent carbon and energy metrics alongside performance. Reuters.

Bottom line for automation and productivity leaders

If you’re already invested in Azure, Claude’s arrival reduces friction to test and adopt a second frontier model without retooling your security, billing, and governance patterns. Given the size of the compute commitment and Nvidia’s co‑engineering, you should plan for faster-moving Claude roadmaps—especially in long‑context reasoning and agentic workflows that help automate research, coding, and complex back‑office processes.

Near term, start with side‑by‑side evaluations on your real tasks (summarization, code review, multi‑doc analysis, policy compliance checks). Then use Foundry’s routing and cost controls to keep quality high while containing spend. In a year defined by model choice, this deal gives you one more lever to turn ambition into measurable productivity gains.

Sources

- Reuters: Microsoft, Nvidia to invest; Anthropic commits $30B to Azure; 1 GW capacity, multicloud context.

- Microsoft Tech Community: Foundry models at Ignite; Claude available to Azure customers.

- CRN: Deal details and Nvidia co‑engineering language.

- GeekWire: Investment breakdown; product access notes.

- Anthropic: Claude on Bedrock; Claude on Vertex AI (FedRAMP High/IL2); $50B U.S. infrastructure plan.

- CNBC/TechCrunch: Nvidia Rubin timeline.

- AP/Guardian: Infrastructure expansion context.