What happened

Nvidia has entered a non‑exclusive agreement to license Groq’s AI inference technology and is hiring Groq founder and CEO Jonathan Ross along with President Sunny Madra and other team members. Groq says it remains an independent company under new CEO Simon Edwards, and its GroqCloud service will continue uninterrupted (Groq newsroom). Multiple outlets initially framed the arrangement as a $20 billion acquisition of assets; Nvidia and Groq characterize it as license‑and‑hire, with financial terms undisclosed (CNBC report via archive; TechCrunch; Reuters). A spokesperson statement reported by PYMNTS reiterates: “We haven’t acquired Groq. We’ve taken a non‑exclusive license … and have hired engineering talent” (PYMNTS).

Deal at a glance

| Item | What’s known |

|---|---|

| Structure | Non‑exclusive technology licensing + key hires |

| Who’s moving | Jonathan Ross (founder/CEO), Sunny Madra (president), other Groq engineers |

| Groq’s status | Continues operating independently; Simon Edwards becomes CEO; GroqCloud continues |

| Price tag | Not disclosed by the companies; media reports widely cite “about $20B” for assets/licensing |

| Strategic aim | Strengthen Nvidia’s position in AI inference (low‑latency, cost‑efficient deployment) |

Why inference is the new battleground

Nvidia dominates the training of large AI models, but the industry’s bottleneck (and spend) is shifting to inference—the real‑time, user‑facing side of AI. Analyst coverage this week underlines that the deal squarely targets that pivot (Axios; Barron’s).

Gartner projects that, as soon as 2026, more than half of AI‑optimized IaaS spending will be for inference rather than training, underscoring why hyperscalers and chip leaders are refocusing on deployment costs and latency (Gartner).

This “license‑and‑hire” structure also fits a broader pattern in big‑tech AI deals aimed at accelerating roadmaps without tripping merger alarms—see Microsoft’s $650M licensing arrangement with Inflection AI alongside hiring its co‑founders, and Amazon’s licensing deal plus key hires from Adept AI (Reuters on Microsoft–Inflection; TechCrunch on Amazon–Adept). Regulators have taken note, even when they ultimately decline formal merger scrutiny (EU decision on Microsoft–Inflection).

What Groq brings: a purpose‑built path to low‑latency inference

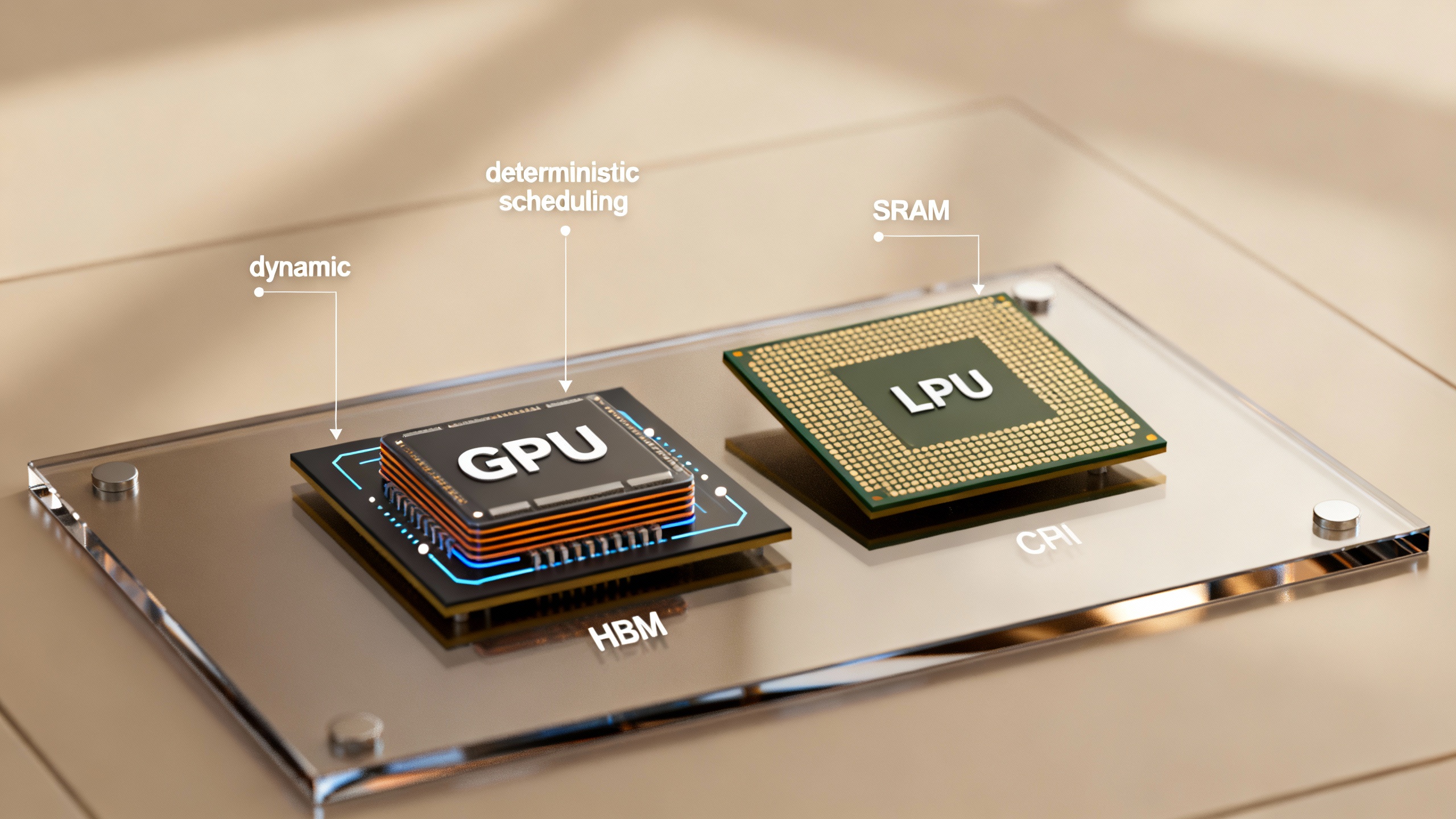

Groq’s Language Processing Unit (LPU) architecture was designed from the ground up for inference, not repurposed from training‑first GPUs. Three ideas matter for practitioners:

- On‑chip SRAM as primary weight storage: Groq packs hundreds of MB of SRAM on die, cutting fetch latency compared with HBM/DRAM hierarchies common in GPU systems (Groq LPU overview; Groq technical blog).

- Deterministic, statically scheduled execution: A compiler pre‑schedules compute and inter‑chip communication down to the cycle, minimizing queuing and tail latency that can plague dynamic GPU scheduling (Groq technical blog).

- Throughput with quality: Groq emphasizes “TruePoint” numerics to speed inference while preserving accuracy, rather than relying on extreme quantization (Groq technical blog).

In public benchmark write‑ups and demos, these design choices translate into consistent time‑to‑first‑token and high sustained token rates for popular LLMs (Groq benchmark post).

Why this helps Nvidia—and what to watch technically

Nvidia gains two things at once: (1) access to Groq’s inference IP under a non‑exclusive license, and (2) the leadership and engineers who designed it. Reporting indicates Nvidia intends to integrate the technology into its “AI factory” architecture to broaden real‑time inference capabilities (Axios; EE Times Asia). A spokesperson also clarified the structure—license plus hires, not an acquisition (PYMNTS).

What we’ll be watching:

- Hybrid stacks: How Nvidia combines GPUs (for training and high‑throughput inference) with LPU‑style deterministic, low‑latency paths for interactive agents and real‑time apps.

- Memory pressure: HBM remains tight across the industry; SRAM‑heavy LPU designs approach this differently, potentially easing certain latency constraints (Business Standard on HBM tightness).

- Software surface area: Where Groq ideas show up—CUDA/TensorRT‑LLM integrations, deployment blueprints, or new microservices—will signal how quickly developers can adopt them.

What changes (and doesn’t) for Groq customers

Groq says GroqCloud will continue to run as before. Leadership transitions to Simon Edwards as CEO, while joining Nvidia are Ross, Madra, and others who will “help advance and scale the licensed technology” (Groq newsroom). TechCrunch notes Groq’s rapid developer traction this year, as the company positioned LPU inference for real‑time use cases (TechCrunch).

For current GroqCloud users, the near‑term message is continuity. For Nvidia customers, expect new inference options to surface as the licensed technology is integrated and productized.

Market take: a strong signal with open questions

Markets read this as Nvidia fortifying the part of AI that’s poised to scale fastest—inference—while neutralizing a potential rival by bringing its founder and core team inside. Analysts praised the strategic logic but questioned rumored price tags relative to Groq’s scale; the companies have not confirmed a figure (Barron’s; Reuters). Either way, it shows Nvidia’s willingness to use its balance sheet to deliver choice (GPUs plus specialized inference silicon) as customers eye total cost per model response, not just training time.

For builders: how to make this actionable

- Re‑baseline your inference SLOs. If your app lives or dies by latency variability (voice agents, trading copilot, industrial control), track whether Nvidia’s platform begins offering deterministic paths informed by LPU‑style execution.

- Model‑to‑hardware mapping. Build a small decision matrix that matches workloads (short‑context chat, long‑context RAG, batch analytics) to target runtimes (GPU, LPU‑like, CPU+accelerator). Keep an eye on power budgets and per‑million‑token costs.

- Hedge for portability. Favor deployment stacks that let you target multiple backends as GPU and LPU options evolve. This is the moment to reduce lock‑in risk.

Sources

- Groq newsroom: Groq and Nvidia enter non‑exclusive inference technology licensing agreement

- Reuters: Nvidia, joining Big Tech deal spree, to license Groq technology, hire executives

- CNBC (archived): Exclusive: Nvidia buying AI chip startup Groq for about $20 billion

- TechCrunch: Nvidia to license AI chip challenger Groq’s tech and hire its CEO

- Axios: Nvidia deal shows why inference is AI’s next battleground

- Barron’s: Nvidia stock slips. Why its Groq deal raises questions.

- PYMNTS: Nvidia acquires tech and talent from inference chip maker Groq (spokesperson quote clarifying structure)

- Gartner: AI‑Optimized IaaS poised for growth; inference to reach 55% of spend in 2026

- Groq technical background: Inside the LPU: Deconstructing Groq’s Speed, LPU architecture overview, Groq benchmark notes

- Industry context: HBM supply tightness impacts AI hardware (Business Standard)