The 10‑Second Take

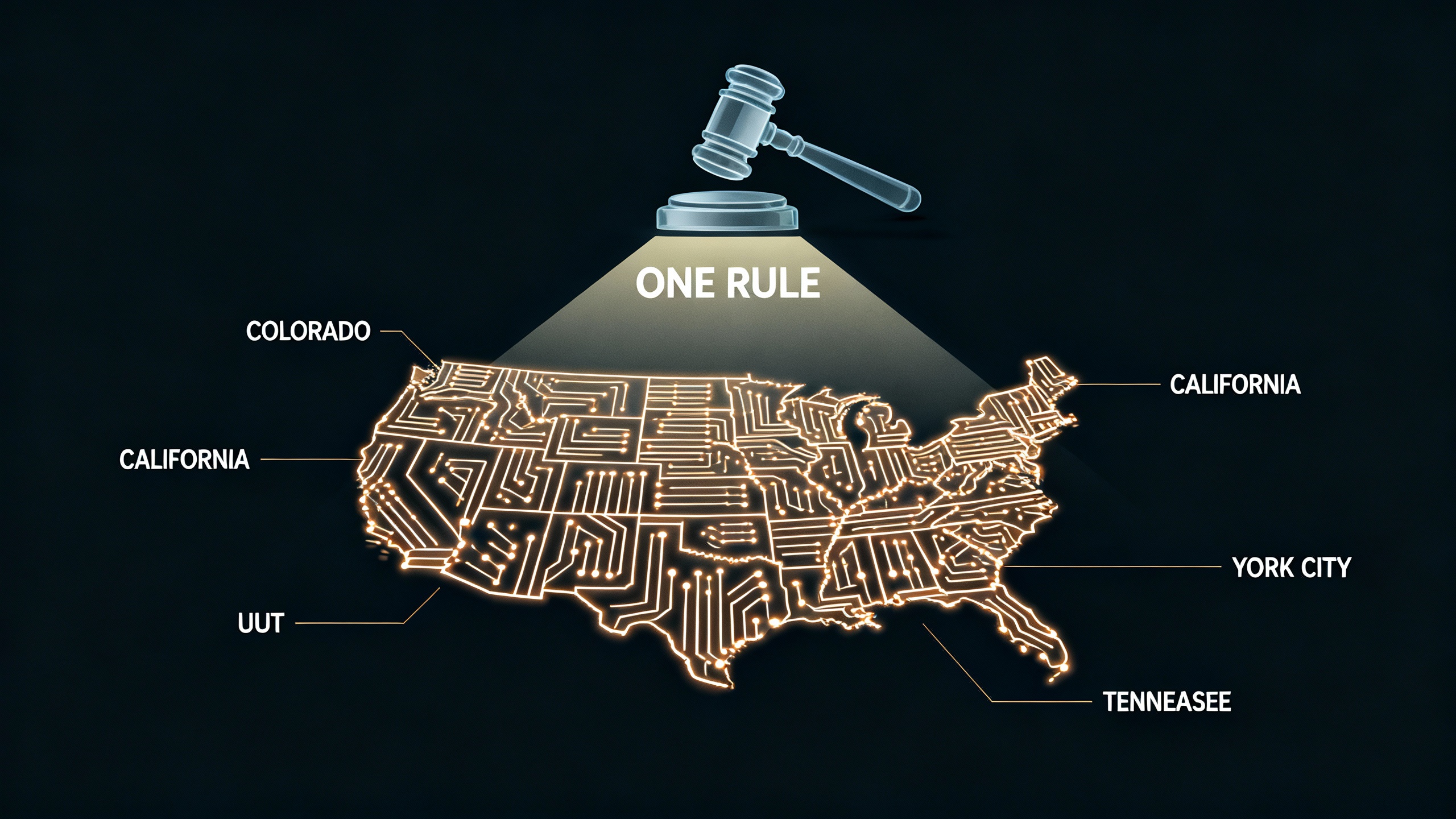

President Trump says he’ll sign a “ONE RULE” executive order this week to create a single national approach to AI—aimed at stopping a 50‑state patchwork before it hardens. Reports suggest the order would direct federal agencies to challenge or sideline state AI rules using litigation, funding conditions, and new federal standards. For builders, this could simplify compliance over time—but nothing changes overnight, and any preemption fight will be messy, litigated, and uneven across jurisdictions. Reuters, Financial Times.

What’s reportedly in the “ONE RULE” EO

Multiple outlets describe a draft order that would push toward a single national standard and directly target state AI laws. While the final text isn’t public yet, reports consistently describe these elements:

- A DOJ “AI Litigation Task Force” to challenge state AI laws that allegedly burden interstate commerce or conflict with federal policy. POLITICO, Nextgov/FCW.

- Conditioning certain federal grants (e.g., some broadband dollars) on states’ alignment with federal AI policy. Axios, Nextgov/FCW.

- A request for the FCC to consider a federal reporting/disclosure standard for AI systems that would preempt conflicting state requirements, and an FTC policy statement clarifying how existing consumer‑protection rules apply to AI. Axios.

This EO would build on a broader shift since January 2025, when Trump revoked key parts of the prior administration’s AI order and directed a more “pro‑innovation” posture, culminating in the White House’s July “America’s AI Action Plan.” White House EO, Jan. 23, 2025; AI Action Plan, July 23, 2025.

How far could an EO go—legally?

- It can direct agencies to sue states. DOJ could challenge specific laws as unconstitutional (e.g., as unduly burdening interstate commerce under the dormant Commerce Clause) or as preempted by existing federal law. But those suits would proceed case by case, move slowly, and are far from guaranteed to succeed.

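- It can condition federal funding. The order could ask agencies to tie eligibility for certain grants to a state’s AI policies. The Supreme Court allows conditions that Congress states unambiguously and that relate to the program’s purpose (South Dakota v. Dole), but it strikes down conditions so coercive that states have no real choice (NFIB v. Sebelius). Agencies can generally attach only the conditions the underlying grant statute authorizes, so expect litigation over both questions. LII Dole, NFIB v. Sebelius.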

- It can ask agencies to set national standards. If the FCC, FTC, or Commerce Department issues rules within its statutory authority, those rules may preempt conflicting state provisions. But each agency must show that Congress actually delegated that power. Courts have recently pushed back on aggressive preemption without clear authority: in 2022, the Ninth Circuit let California’s net‑neutrality law stand because the FCC, having classified broadband outside its Title II common‑carrier authority, lacked the power to preempt it. CRS on ACA Connects v. Bonta, Justia case summary.

- It cannot commandeer states. The federal government can’t force state officials to administer federal policy (anti‑commandeering doctrine). Any attempt to make states enforce federal AI rules would likely fail. LII CONAN on anti‑commandeering.

Bottom line: the EO can start a preemption campaign, but courts will decide the contours—and that will take months to years.

Which state and local AI rules are “on the bubble”?

State and local AI/automation rules builders are watching

| Jurisdiction | Rule | What it does | Status/timing |
|---|---|---|---|
| Colorado | SB 24‑205 (Colorado AI Act) | “Reasonable care” duties for developers/deployers of high‑risk AI; notice, risk‑management, and impact‑assessment obligations | Effective date delayed to June 30, 2026; amendments anticipated in the 2026 session. Colo. GA summary, GT Law insight |
| California | CPPA ADMT regulations | Automated decisionmaking technology (ADMT) rules under the CCPA: risk assessments, disclosures, and opt‑outs in defined contexts | Approved Sept. 23, 2025; implementation dates vary by provision. CPPA 9/23/25 |
| NYC | Local Law 144 (AEDT) | Bias audits and candidate notices for automated employment decision tools (AI hiring tools) | In effect; audit and notice requirements are ongoing. NYC DCWP |
| Utah | SB 149 (2024) | Consumer‑facing AI disclosures; creates the Office of Artificial Intelligence Policy; UDAP (consumer‑protection) enforcement | In force since May 1, 2024. GT Law, Orrick |
| Tennessee | ELVIS Act (2024) | Protects creators’ voice and likeness against AI impersonation | In force since July 1, 2024. AP, Tennessee Gov. |

Many states also restrict election deepfakes (e.g., Minnesota, Texas, Washington), with active First Amendment litigation testing the bounds. Minnesota statute, AP on X lawsuit, UW CIP on WA law, Texas code.

The politics: industry unity vs. state pushback

The White House and allied lawmakers have floated preemption for months, but momentum in Congress has been choppy. A recent Axios report said the push to write straightforward preemption language into federal law had stalled, and state attorneys general from both parties have urged Washington not to nullify state AI laws. Expect similar resistance to a preemption‑oriented EO. Axios, Reuters, TN AG release, NCSL letter, CA AG release.

What it means for builders (right now)

- Keep shipping—but don’t tear out your state compliance toggles yet. Until courts or agencies actually preempt specific provisions, state rules remain enforceable. That includes Colorado’s 2026 obligations (now delayed but very much alive) and California’s ADMT rules. Colo. GA, CPPA.

- Expect more federal “signals” than mandates in the near term. Look for FTC policy guidance and sector‑specific standards, and for the FCC to at least open proceedings on disclosures. Those may shape expectations before they carry preemptive force. Axios.

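- Design to the center of gravity: NIST/AISI. Even under a deregulatory banner, the White House has pointed to NIST and its AI institute (reorganized as the Center for AI Standards and Innovation, CAISI, in June 2025) as the neutral backbone for risk management and evaluations, through artifacts such as the NIST AI Risk Management Framework. Building on them offers some future‑proofing whichever way the legal winds blow. NIST/AISI guidance, NIST 2025 updates.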
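One way to keep state compliance toggles in place while the preemption fight plays out is to key each control to a jurisdiction and an effective date, and to switch a control off only when a specific court ruling or agency rule actually preempts it. The sketch below assumes a simple in‑memory registry; the `StateControl` class, the `active_controls` and `mark_preempted` helpers, and the control names are all hypothetical. Only the effective dates come from the table above.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Iterable, Optional


@dataclass(frozen=True)
class StateControl:
    jurisdiction: str            # e.g. "CO", "UT", "NYC"
    control: str                 # hypothetical internal control name
    effective: Optional[date]    # None means "already in effect"
    preempted: bool = False      # set True only after a specific court or agency action


# Hypothetical registry: control names are illustrative; dates are from the table.
REGISTRY = [
    StateControl("CO", "high_risk_ai_notice", date(2026, 6, 30)),
    StateControl("UT", "consumer_ai_disclosure", date(2024, 5, 1)),
    StateControl("NYC", "aedt_bias_audit", None),
]


def active_controls(registry: Iterable[StateControl], jurisdiction: str, today: date) -> set[str]:
    """Controls that apply in `jurisdiction` on `today` and have not been preempted."""
    return {
        c.control
        for c in registry
        if c.jurisdiction == jurisdiction
        and not c.preempted
        and (c.effective is None or c.effective <= today)
    }


def mark_preempted(registry: list[StateControl], jurisdiction: str, control: str) -> list[StateControl]:
    """Return a new registry with one control marked preempted (e.g. after a final ruling)."""
    return [
        replace(c, preempted=True) if (c.jurisdiction, c.control) == (jurisdiction, control) else c
        for c in registry
    ]
```

For example, `active_controls(REGISTRY, "CO", date(2026, 7, 1))` returns `{"high_risk_ai_notice"}`, while the same call with `date(2026, 1, 1)` returns an empty set. Signing an EO changes nothing in this model; only `mark_preempted` on a specific rule does, which mirrors the point that state rules stay enforceable until courts or agencies act.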

A practical 30/60/90 for product, legal, and security

60 days: Normalize to a federal spine

- Where feasible, standardize notices, user controls, and vendor diligence at the “strictest common denominator” across your largest markets—even if the EO ultimately narrows requirements.

- Prep a public‑facing AI transparency page (system cards, safety eval summaries, points of contact). It plays well with regulators at all levels and can shorten procurement cycles.

- For hiring tools, assume AEDT‑style audit discipline applies nationally and tighten vendor contracts accordingly.
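The “strictest common denominator” idea can be expressed as a merge that keeps the stricter value of each setting across markets. This is a minimal sketch under assumed, simplified fields: `Baseline`, its three fields, and the market values are hypothetical and are not readings of any particular statute.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Dict, Optional


@dataclass(frozen=True)
class Baseline:
    ai_notice: bool = False                          # show an AI-use notice
    opt_out: bool = False                            # offer an opt-out path
    max_days_between_audits: Optional[int] = None    # None means no audit requirement


def stricter(a: Baseline, b: Baseline) -> Baseline:
    """Combine two baselines, keeping the stricter value of each field."""
    limits = [d for d in (a.max_days_between_audits, b.max_days_between_audits) if d is not None]
    return Baseline(
        ai_notice=a.ai_notice or b.ai_notice,        # required anywhere -> required everywhere
        opt_out=a.opt_out or b.opt_out,
        max_days_between_audits=min(limits) if limits else None,  # shorter interval is stricter
    )


def strictest_common_denominator(markets: Dict[str, Baseline]) -> Baseline:
    """Fold every market's baseline into one policy that satisfies all of them."""
    return reduce(stricter, markets.values(), Baseline())


# Hypothetical markets with illustrative values.
markets = {
    "market_a": Baseline(ai_notice=True),
    "market_b": Baseline(opt_out=True, max_days_between_audits=365),
    "market_c": Baseline(max_days_between_audits=180),
}
policy = strictest_common_denominator(markets)
print(policy)  # Baseline(ai_notice=True, opt_out=True, max_days_between_audits=180)
```

Folding from an empty `Baseline()` means a market with no requirements never loosens the result, and if a state rule is later preempted or narrowed, you only update that market's entry and recompute.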

Key unknowns builders should track

- Scope: Does the final EO target only “AI‑specific” laws, or also state privacy and consumer rules when applied to AI? California’s ADMT rules sit under the state’s privacy law (the CCPA) rather than a stand‑alone AI statute, which likely makes them harder to preempt without congressional action. CPPA.

- Authority: Which statutes do agencies cite to claim preemptive power? Courts look for clear delegation. The net‑neutrality cases illustrate the peril of preemption without it. CRS on ACA Connects.

- First Amendment: Some state AI restrictions (especially around political speech) face free‑speech challenges regardless of preemption, and those cases could reshape obligations for content and ad platforms. Reuters on X lawsuit.

The bottom line

If signed as previewed, the “ONE RULE” EO would kick off a federal campaign to corral state AI regulation. That could relieve builders of duplicative, divergent rules over time. But preemption here isn’t a light switch; it’s a long corridor of agency actions and lawsuits. Build to a stable center (NIST/AISI), keep state‑level controls in place, and architect your compliance so it can flex as the legal map shifts.

Sources

- Reuters: Trump says he will sign executive order this week on AI approval process.

- Financial Times: Trump to issue executive order for single federal rule on AI regulation.

- POLITICO: White House prepares executive order to block state AI laws.

- Axios: Trump’s push to overrule state AI regulations stalls; Draft EO details on FCC/FTC roles.

- Nextgov/FCW: White House considers order to preempt state AI laws.

- White House: Removing Barriers to American Leadership in AI (EO, Jan. 23, 2025); America’s AI Action Plan.

- NIST/US AISI: Updated guidelines on managing misuse risk for dual‑use foundation models; NIST 2025 updates.

- Colorado: SB25B‑004 delaying AI Act to June 30, 2026; Greenberg Traurig summary.

- California: CPPA finalizes ADMT and related regulations (Sept. 23, 2025).

- NYC AEDT: Local Law 144 guidance.

- State AG opposition: Reuters; TN AG press release with bipartisan letter; NCSL letter; CA AG release.

- Preemption/federalism: CRS Primer; CRS Sidebar; EO 13132 (Federalism); LII on preemption; Dole; NFIB v. Sebelius; ACA Connects v. Bonta.