The short version

The White House has cleared Nvidia to export its H200 AI accelerators to “approved customers” in China — but only under Commerce Department vetting and with a 25% surcharge flowing to the U.S. Treasury. Nvidia’s newer Blackwell chips (B200/GB200) remain off-limits. Beijing, meanwhile, is signaling it may limit access on its side. The net effect: some near‑term easing for supply chains and Chinese AI builders, without erasing the generational gap or the compliance risk.

What changed on December 8–9, 2025

- President Donald Trump said the U.S. will allow Nvidia to sell H200 GPUs to China with a 25% fee on those sales, and that similar permissions could apply to AMD and Intel under Commerce oversight. Reuters, AP, Politico, Semafor.

- China may restrict access on its end, with regulators reportedly preparing to limit how widely H200s can be deployed. Reuters summarizing FT.

- The Justice Department simultaneously highlighted smuggling risks, charging individuals over illicit attempts to ship H100/H200s into China — a reminder that gray markets have flourished during the ban era. Reuters.

Why H200 matters (and what’s still fenced off)

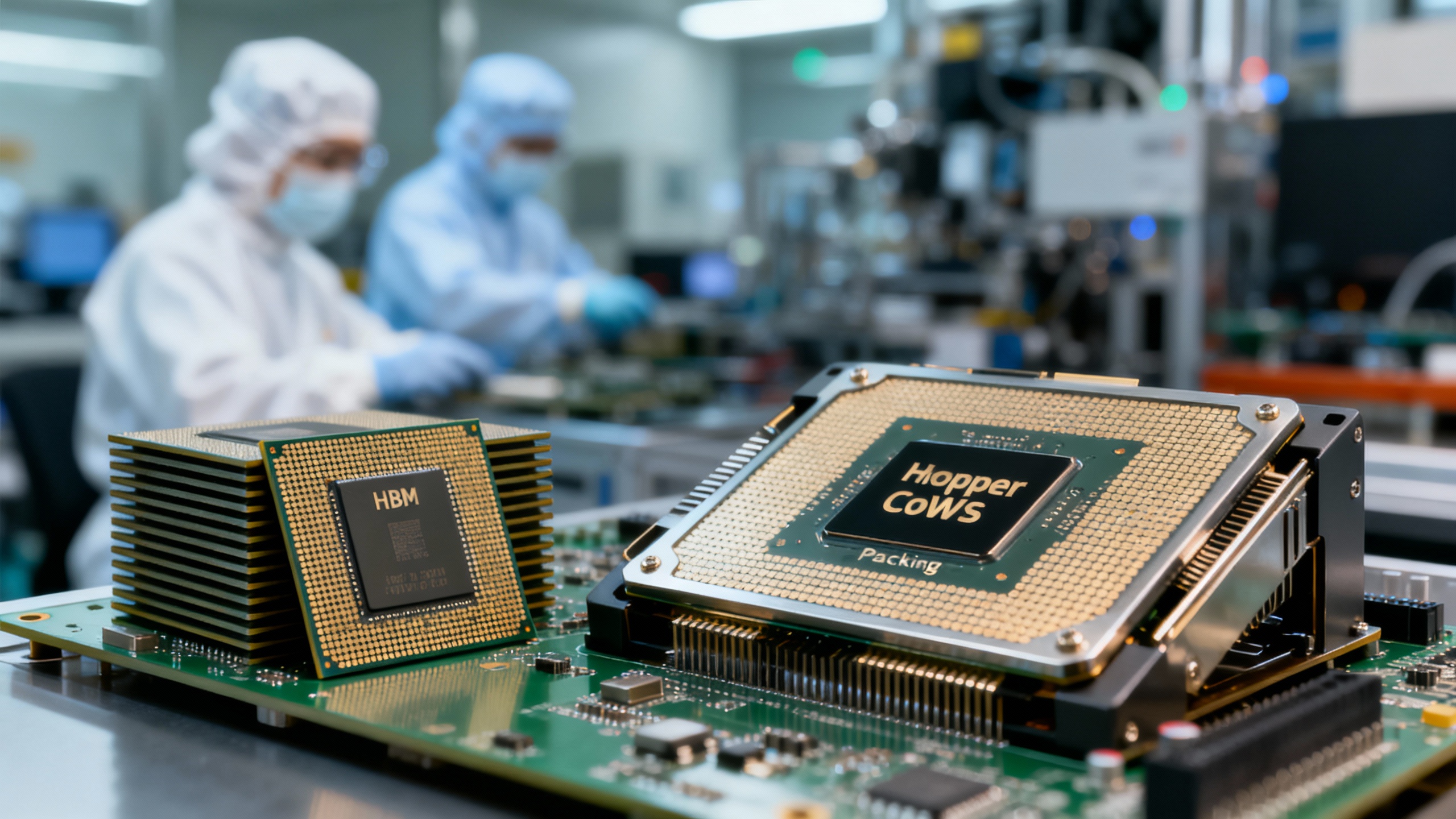

H200 is Nvidia’s top Hopper‑generation GPU with 141 GB of HBM3e memory and 4.8 TB/s bandwidth — a big step up from H100 on memory‑bound AI workloads. Nvidia press release, Product page, Lenovo/partner specs, Tom’s Hardware.

However, Nvidia’s newer Blackwell parts (B200/GB200) boast far more bandwidth and support lower precisions (FP4/FP6), enabling much larger models and faster inference. Those remain barred for China. Tom’s Hardware, Civo explainer.

Semafor reports the allowance aims to permit GPUs roughly “a generation and a half” behind Nvidia’s cutting edge, i.e., well short of Blackwell. Semafor, TechCrunch.
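To make the "memory-bound" point concrete, here is a rough back-of-the-envelope sketch, not a benchmark. It assumes single-stream decoding in which every generated token streams the full set of model weights from HBM once, and it ignores KV-cache traffic, batching, and compute limits. The H200 figures are the ones cited above. The H100 figures (80 GB, 3.35 TB/s for the SXM part) come from Nvidia's public datasheet rather than this article, and the 70B-parameter model is purely hypothetical.

```python
# Bandwidth-bound estimate of decode throughput (an upper bound, not a benchmark).
# Assumption: each token requires reading all model weights from HBM once.

H100 = {"mem_gb": 80, "bw_tbps": 3.35}   # H100 SXM, per Nvidia datasheet (not from this article)
H200 = {"mem_gb": 141, "bw_tbps": 4.8}   # H200, as cited in the article


def max_tokens_per_second(bw_tbps: float, params_billion: float, bytes_per_param: float) -> float:
    """Upper bound on tokens/s when weight streaming is the only bottleneck."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bw_tbps * 1e12 / weight_bytes


def fits_in_memory(mem_gb: float, params_billion: float, bytes_per_param: float) -> bool:
    """True if the weights alone fit in HBM (ignores KV cache and activations)."""
    return params_billion * bytes_per_param <= mem_gb


if __name__ == "__main__":
    params_b = 70  # hypothetical 70B-parameter model
    for name, gpu in (("H100", H100), ("H200", H200)):
        for label, bpp in (("FP16", 2.0), ("FP8", 1.0)):
            fits = fits_in_memory(gpu["mem_gb"], params_b, bpp)
            tps = max_tokens_per_second(gpu["bw_tbps"], params_b, bpp)
            print(f"{name} {label}: weights fit={fits}, bound={tps:.1f} tokens/s")
```

Under these assumptions the throughput bound scales with the bandwidth ratio, about 1.43x in H200's favor (4.8 / 3.35). The larger memory also changes what fits on a single card at all: the hypothetical model's FP16 weights (140 GB) squeeze into 141 GB but not into 80 GB, although real deployments would still need headroom for the KV cache.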

The 25% levy: green light or legal gray area?

- The administration framed the 25% as a surcharge or revenue share attached to licensed exports. Politico, Reuters, Euronews.

- Lawyers note that the U.S. Constitution’s Export Clause bars federal taxes on exports, raising questions about how a 25% take is structured in law and regulation. Earlier in 2025, the White House acknowledged it was still “ironing out” the mechanics of a 15% arrangement tied to China‑compliant H20 sales. CNBC. For background on the Export Clause, see LII and Congress.gov summaries. LII, Congress.gov.

- Expect congressional scrutiny: Senator Elizabeth Warren has already criticized the decision and sought hearings. U.S. Senate release, earlier letter.
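Because the mechanics of the 25% have not been published, the arithmetic depends on how it is structured. The sketch below compares three hypothetical structures; the scenario names, the function, and the list price of 100 are all illustrative assumptions, not reported terms.

```python
# Three hypothetical ways a 25% levy could be applied to one sale.
# None of these is confirmed; they only show how the structure changes who pays.

def levy_scenarios(list_price: float, levy_rate: float = 0.25) -> dict:
    """Return buyer price, Treasury take, and vendor net for each assumed structure."""
    # 1. Vendor absorbs: buyer pays list price, levy comes out of the vendor's revenue.
    absorbs = {
        "buyer_pays": list_price,
        "treasury": levy_rate * list_price,
        "vendor_net": (1 - levy_rate) * list_price,
    }
    # 2. Added on top: levy is computed on list price and charged to the buyer.
    on_top = {
        "buyer_pays": list_price * (1 + levy_rate),
        "treasury": levy_rate * list_price,
        "vendor_net": list_price,
    }
    # 3. Grossed up: levy is a share of the final sale price, and the vendor
    #    raises that price until it still nets the original list price.
    gross_price = list_price / (1 - levy_rate)
    grossed_up = {
        "buyer_pays": gross_price,
        "treasury": levy_rate * gross_price,
        "vendor_net": list_price,
    }
    return {"vendor_absorbs": absorbs, "added_on_top": on_top, "grossed_up": grossed_up}


if __name__ == "__main__":
    for name, row in levy_scenarios(100.0).items():
        print(name, {k: round(v, 2) for k, v in row.items()})
```

The spread matters for buyers: depending on the structure, the same nominal 25% leaves the buyer's price unchanged, raises it by 25%, or raises it by about 33%.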

Supply chain reality check

Where H200s come from

- Foundry and packaging: Hopper‑generation GPUs (H100/H200) are built at TSMC (custom 4N process) and use advanced CoWoS packaging; capacity has been the persistent bottleneck. The Register, TrendForce via BusinessWire, TrendForce update.

- Memory: H200 relies on HBM3e supplied primarily by SK hynix and Micron, with Samsung ramping. Micron confirmed its HBM3e is designed into Hopper systems and began volume shipments aligned with H200 rollouts in 2024. Micron, Reuters on Samsung HBM3 for H20.

Will China actually get volume?

- China has recently tightened its own checks and discouraged purchases of some Nvidia parts, while FT reporting suggests regulators could limit access even if U.S. licenses flow. Reuters/FT, CNBC.

- TSMC’s packaging lines are heavily booked into 2025–2026 by Nvidia’s next‑gen products; capacity growth helps, but Blackwell demand consumes most of the slack. TrendForce news, TechPowerUp.

Bottom line: Some H200s should flow, but not a flood — and Chinese policy may keep deployments selective.

Winners, losers, and second‑order effects

Who is affected and how

| Stakeholder | What changes now | Near‑term impact | Risks/unknowns |
|---|---|---|---|
| Nvidia | Can resume H200 sales to approved Chinese customers with a 25% fee | Monetizes inventory; sustains CUDA mindshare in China | Levy mechanics; Chinese adoption limits; future rule reversals |
| Chinese AI labs & cloud | Access to top‑tier Hopper memory bandwidth, still behind Blackwell | Faster LLM training/inference vs. H20‑class; less reliance on gray markets | Regulatory whiplash; higher TCO with 25% surcharge; selective approvals |
| HBM suppliers (SK hynix, Micron, Samsung) | Incremental demand tied to H200 | Tight supply persists; pricing power supported | Validation/yield on newer HBM3e stacks; geopolitics |
| TSMC & OSATs | More Hopper packaging in parallel with Blackwell ramps | CoWoS lines stay full; revenue mix strong | Capacity prioritization, especially for Blackwell |
| U.S. policymakers | A transactional middle path vs. blanket bans | Curtails smuggling; captures revenue | Export‑tax legal challenges; national‑security pushback |
| Chinese chipmakers (Huawei Ascend, CXMT HBM plans) | Slightly less pressure if H200s arrive | Breathing room to mature local stacks | Domestic‑preference policies may still trump imports |

How this changes AI roadmaps (and what it doesn’t)

- Performance tiering remains: Chinese buyers may step up from H20‑class to H200, but U.S./allied markets stay on Blackwell and then Rubin — widening capabilities and efficiency over time. Tom’s Hardware.

- Software gravity still favors Nvidia: Even with constraints, CUDA’s ecosystem advantage keeps developers anchored unless Beijing mandates migration. Reuters on China vs H200.

- China will keep building at home: Memory maker CXMT is pushing toward HBM3 by 2026, highlighting a longer‑term import substitution path that doesn’t solve today’s shortages. Reuters.

Compliance and procurement: practical guidance

The backdrop: how we got here

Since 2022, the U.S. has tightened controls on advanced compute and tools (October 2023 rules; further clarifications in 2024–2025), often with a presumption of denial for China and broad “foreign direct product” reach. BIS 2023 fact sheet, BIS April 2024 clarifications, Covington summary, Covington Jan 2025 update. Nvidia’s China revenue plunged, and in April 2025 the company disclosed charges of up to $5.5B tied to its China‑market H20 after Washington imposed a license requirement on those exports. That whiplash set the stage for today’s “pay‑to‑export” compromise. CNBC.

What to watch next

- Commerce’s fine print: who qualifies as an “approved customer,” shipment auditing, and whether the 25% is structured as a fee rather than a tax.

- Beijing’s counter‑moves: procurement guidance to state‑linked firms, customs checks, or limits on public‑cloud use of H200s. CNBC, Reuters/FT.

- Supply chain choke points: CoWoS expansion pace, HBM3e yields, and how much Hopper capacity Nvidia reallocates to China versus Blackwell ramps. TrendForce, Micron.

- Legal challenges: whether Congress or courts test the Export Clause boundary on a de‑facto export toll. LII.

The takeaway

The H200 opening is not a reset. It’s a controlled valve: enough to reduce diversion and re‑engage (some) Chinese customers, not enough to erase the performance lead enjoyed by buyers of Blackwell‑class systems. For AI builders and IT buyers, the practical play is to budget for volatility, engineer for portability, and treat compliance as a first‑order design constraint — because in 2026, policy will still be part of your architecture.

Sources

- Announcement and policy framing: Reuters via Yahoo Finance, AP, Politico, Semafor 1, Semafor 2, TechCrunch.

- China response and gray‑market context: Reuters/FT, CNBC, Reuters DOJ case.

- H200/Blackwell capabilities: Nvidia press release, Nvidia H200 page, Lenovo, Tom’s Hardware H200, Tom’s Hardware Blackwell, Civo.

- Supply chain: The Register, BusinessWire/TrendForce, TrendForce 2024/2025, TechPowerUp, Micron HBM3e, Reuters on Samsung HBM3 for H20.

- Export controls background: BIS Dec 2024, BIS Apr 2024, Covington 2023, Covington Jan 2025, CNBC on Nvidia’s China exposure and H20 write‑down, Congress.gov CRS overview.