
The short answer: what actually differs

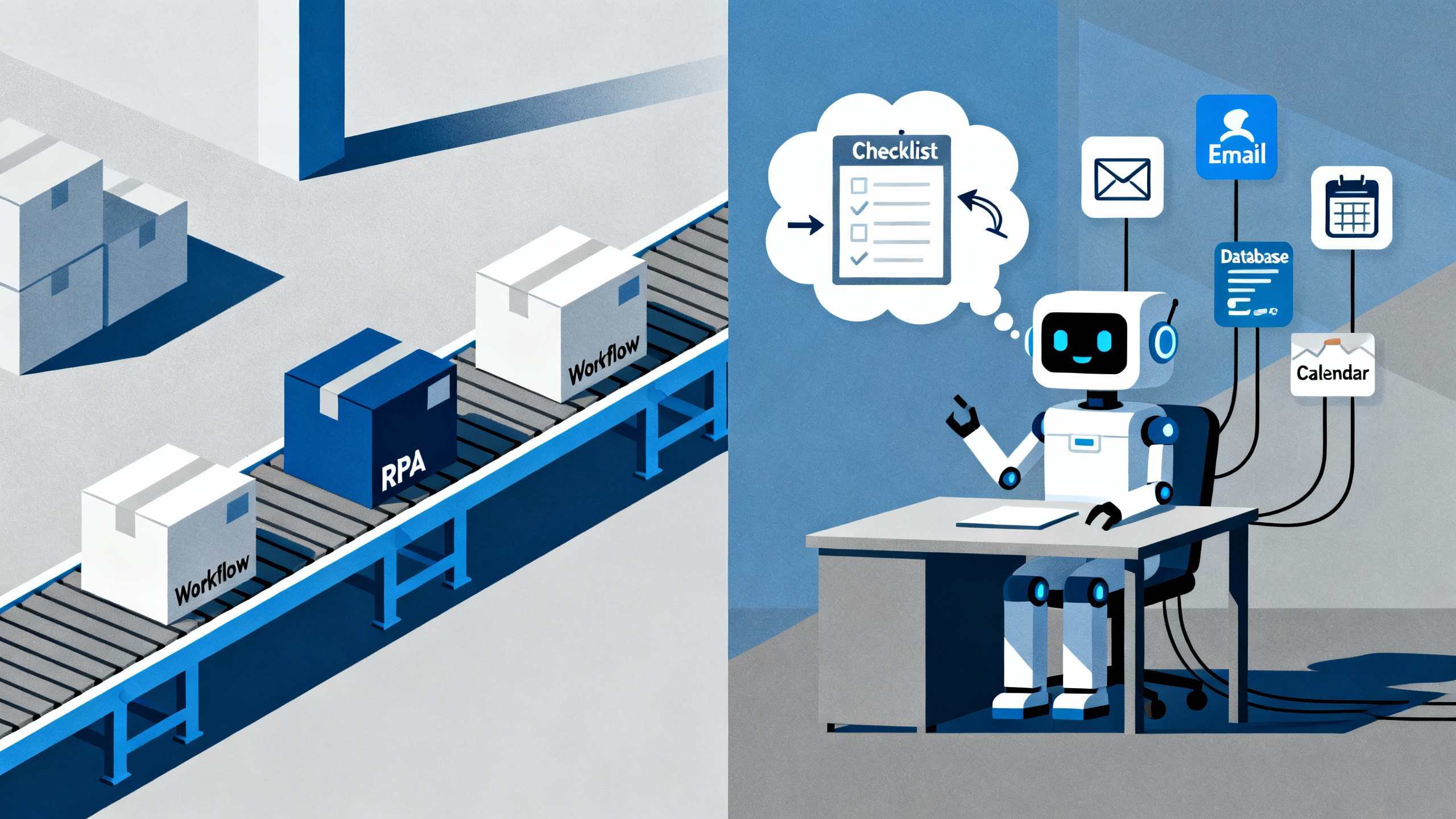

Think of traditional automation as a conveyor belt: precise, fast, and great at repeating a known sequence. You give it structured inputs and explicit rules, and it does the same thing every time.

Agentic AI is more like a capable assistant you brief with a goal. It perceives context, plans steps, chooses tools, acts, checks its work, and adapts. It can write emails, call APIs, reason across documents, and change course if the situation shifts.

- Automation: deterministic workflows and RPA executing predefined steps on structured data.

- Agentic AI: goal-driven systems (often powered by large language models) that plan, act with tools, and self-correct in a loop.

The result: automation thrives on certainty; agentic AI thrives on ambiguity. You will often use both. McKinsey notes that many jobs contain substantial pockets of automatable activities rather than being automatable as a whole, which reinforces the case for mixed approaches (McKinsey Global Institute).


How they think: pipelines vs loops

Automation runs a pipeline: trigger, steps, done. If an exception appears that it was not programmed to handle, it stops or escalates. That is perfect when your process is stable and inputs are predictable.
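To make the pipeline idea concrete, here is a minimal sketch in Python. The `Invoice` fields, the currency list, the approval threshold, and the `EscalationRequired` exception are all hypothetical names chosen for illustration, not part of any specific RPA or workflow product.

```python
from dataclasses import dataclass


class EscalationRequired(Exception):
    """Raised when the pipeline meets an input it has no rule for."""


@dataclass
class Invoice:
    vendor: str
    amount: float
    currency: str


def validate(invoice: Invoice) -> Invoice:
    # Explicit rules only: anything outside them stops the run and escalates.
    if invoice.currency not in {"USD", "EUR"}:  # assumed supported currencies
        raise EscalationRequired(f"unsupported currency: {invoice.currency}")
    if invoice.amount <= 0:
        raise EscalationRequired("amount must be positive")
    return invoice


def route(invoice: Invoice) -> str:
    # Fixed threshold (assumed value): the same input always yields the same route.
    return "manager_approval" if invoice.amount >= 1000 else "auto_approve"


def run_pipeline(invoice: Invoice) -> str:
    # trigger -> steps -> done, with no planning and no retries.
    return route(validate(invoice))
```

Calling `run_pipeline(Invoice("Acme", 250.0, "USD"))` returns `"auto_approve"` every time, while an invoice in an unlisted currency raises `EscalationRequired` instead of guessing. That rigidity is the point: the pipeline is predictable precisely because it refuses to improvise.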

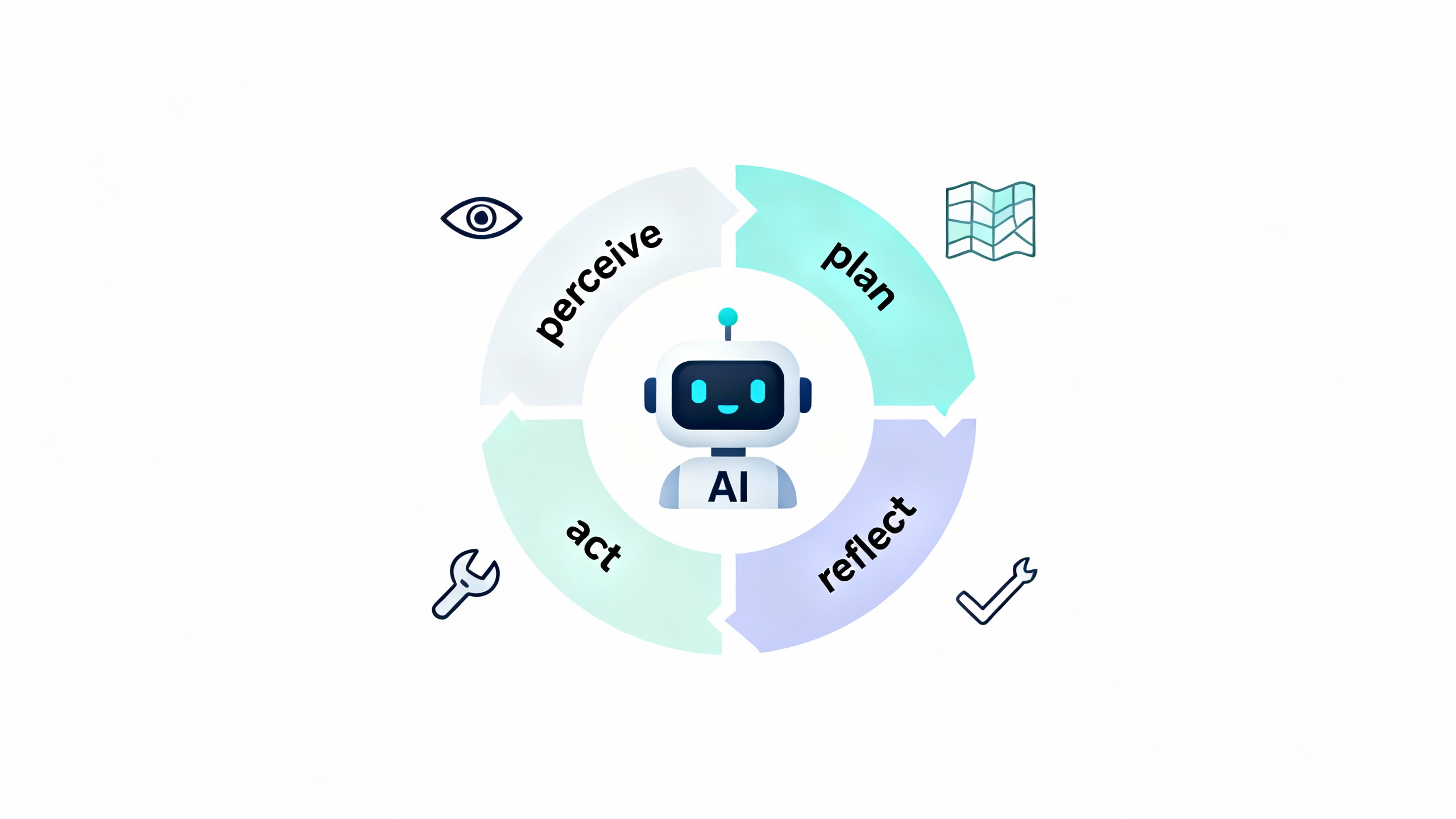

Agentic AI runs a loop. A classic pattern is perceive → plan → act → check → repeat. The agent gathers signals (tickets, emails, records), creates a plan, selects tools (APIs, databases, search, RPA bots), executes, then evaluates results. If the outcome is not good enough, it revises the plan or asks for help. This loop makes agentic systems adaptable, but also less predictable and more in need of guardrails.
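The loop can be sketched in a few lines of Python. This is an illustrative skeleton, not the API of any agent framework: `run_agent`, its callbacks, and the `demo` stubs are hypothetical, and the planner is a plain function standing in for an LLM call. Note the `max_steps` cap, which is one simple guardrail against a loop that never converges.

```python
from typing import Callable, Dict, List, Optional

Tool = Callable[[dict], dict]


def run_agent(
    goal: str,
    perceive: Callable[[], dict],
    plan: Callable[[str, dict, List[dict]], Optional[dict]],
    tools: Dict[str, Tool],
    check: Callable[[str, dict], bool],
    max_steps: int = 5,
) -> dict:
    history: List[dict] = []
    for _ in range(max_steps):
        context = perceive()                   # gather signals
        step = plan(goal, context, history)    # decide the next action
        if step is None:
            return {"status": "escalated", "reason": "no plan", "history": history}
        tool = tools.get(step["tool"])         # select a tool by name
        if tool is None:
            return {"status": "escalated", "reason": "unknown tool", "history": history}
        result = tool(step["args"])            # act
        history.append({"step": step, "result": result})
        if check(goal, result):                # evaluate the outcome
            return {"status": "done", "result": result, "history": history}
    return {"status": "escalated", "reason": "step budget exhausted", "history": history}


def demo() -> dict:
    orders = {"A1": "shipped"}

    def perceive() -> dict:
        return {"ticket": "Where is order A1?"}

    def plan(goal: str, context: dict, history: List[dict]) -> Optional[dict]:
        # Stub planner: the first attempt uses a wrong ID, the retry corrects it.
        order_id = "A1" if history else "A-1"
        return {"tool": "order_status", "args": {"order_id": order_id}}

    def order_status(args: dict) -> dict:
        return {"status": orders.get(args["order_id"])}

    def check(goal: str, result: dict) -> bool:
        return result["status"] is not None

    return run_agent("answer order status", perceive, plan,
                     {"order_status": order_status}, check)
```

In `demo()`, the first tool call fails the check, the planner sees the failed attempt in `history`, and the second call succeeds. When the loop cannot make progress, it returns an `escalated` status rather than looping forever.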


Key differences at a glance

Automation vs agentic AI at a glance

| Dimension | Traditional automation | Agentic AI | Typical tooling |
| --- | --- | --- | --- |
| Triggering | Event-based, scheduled | Goal-based with dynamic subgoals | Workflow engines, RPA vs. agent frameworks |
| Logic | If-then rules, deterministic | Reasoning, planning, tool selection | Rules engines vs. LLM planners, tool-use APIs |
| Inputs | Structured, validated | Unstructured and messy (email, docs, chat) | ETL, forms vs. retrieval-augmented generation |
| Exception handling | Escalate or fail | Self-correct, ask clarifying questions, escalate with context | Alerts vs. reflection loops |
| Predictability | High | Moderate; needs constraints | Static paths vs. constrained action spaces |
| Auditability | Strong (fixed steps) | Requires logging and replay of thoughts, tools, and decisions | Orchestrators with tracing |
| Risk controls | Access controls, approvals | Plus prompt-injection defenses, tool whitelists, sandboxing | IAM, policy engines, allowlists |
| Cost model | Mostly compute and licenses | Token usage, tool calls, retries | Token budgeting, caching |

Real-world examples you can picture


Invoice capture and approvals

- Automation excels: extract from structured PDFs, validate fields, route for approval, post to ERP. When a field is missing, it pauses for a human.

- Agentic AI adds resilience: detect missing tax ID, email the vendor for clarification, parse the reply, update the record, and only escalate if the vendor does not respond.
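The clarification step for invoices might look like the sketch below. Everything here is an assumption made for illustration: the invoice dictionary keys, the `ask_vendor` callback (which stands in for sending an email and waiting for a reply), and the tax ID pattern, which only matches a US EIN-like `NN-NNNNNNN` format.

```python
import re
from typing import Callable, Optional

# Assumed format for illustration only; real tax IDs vary by country.
TAX_ID_PATTERN = re.compile(r"\b\d{2}-\d{7}\b")


def resolve_missing_tax_id(
    invoice: dict,
    ask_vendor: Callable[[str, str], Optional[str]],
) -> dict:
    if invoice.get("tax_id"):
        return {**invoice, "state": "ready_for_approval"}

    # ask_vendor returns the reply text, or None if the vendor never answered.
    reply = ask_vendor(
        invoice["vendor_email"],
        "Please send your tax ID for invoice " + invoice["number"],
    )
    if reply is None:
        return {**invoice, "state": "escalated", "reason": "vendor did not respond"}

    match = TAX_ID_PATTERN.search(reply)
    if match is None:
        return {**invoice, "state": "escalated",
                "reason": "reply had no recognizable tax ID"}

    # Update the record and hand it back to the deterministic approval flow.
    return {**invoice, "tax_id": match.group(0), "state": "ready_for_approval"}
```

The function only escalates when the vendor is silent or the reply is unusable. In every other case the record returns to the ordinary approval route, so the agentic part stays confined to the one step that needs it.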


Customer support triage

- Automation excels: route tickets by keyword or form choice, update CRM, send standard acknowledgments.

- Agentic AI boosts resolution: read the ticket, check order status via API, propose a fix, draft a reply, and ask the user for confirmation. If the user says they already tried that, the agent revises its plan and tries step two.
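As a rough sketch of how the two halves fit together in triage, the router below handles tickets that match exactly one keyword rule and hands everything else to an agent queue. The keywords, queue names, and the `agent_triage` label are hypothetical.

```python
# Hypothetical keyword-to-queue rules; a real deployment would load these from config.
ROUTES = {
    "refund": "billing",
    "invoice": "billing",
    "password": "it_helpdesk",
    "login": "it_helpdesk",
}


def route_ticket(text: str) -> str:
    # Normalize words and collect every queue whose keyword appears in the ticket.
    words = (w.strip(".,!?") for w in text.lower().split())
    queues = {ROUTES[w] for w in words if w in ROUTES}
    if len(queues) == 1:
        return queues.pop()      # unambiguous: deterministic routing
    return "agent_triage"        # no match or conflicting matches: let an agent read it
```

A ticket like "I need a refund please" goes straight to `billing`. A ticket that matches nothing, or matches two different queues, is exactly the ambiguous case where reading the full text pays off.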


Sales operations

- Automation excels: sync leads between systems on a schedule, enforce field formats, trigger playbooks weekly.

- Agentic AI helps with context: enrich accounts from public sites, summarize recent calls, propose next-best-actions, and schedule outreach when signals change.


IT service management

- Automation excels: apply known runbooks for routine incidents; reset passwords; rotate keys nightly.

- Agentic AI handles ambiguity: interpret log snippets, form a hypothesis, test it in a sandbox, and write a clear incident timeline for the postmortem.


When to use what (a simple decision lens)

Use this heuristic:

- If your inputs are consistent and your process rarely changes, prefer automation.

- If your task is fuzzy, requires reading, writing, or adapting, consider agentic AI with safeguards.

- For critical operations, use automation for the core and agentic AI at the edges where flexibility pays off.

Tip: Quick rule of thumb

If you can write a flowchart with few diamonds, automate it. If your flowchart explodes with exceptions, empower an agent but fence it with approvals, tests, and telemetry.
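If it helps to see the heuristic written down, it can be encoded as a small function. The three boolean inputs and the returned labels are simplifications invented for this sketch; real decisions will weigh more factors.

```python
def recommend_approach(inputs_consistent: bool, process_stable: bool, critical: bool) -> str:
    # Consistent inputs and a stable process: a plain workflow is enough.
    if inputs_consistent and process_stable:
        return "automation"
    # Fuzzy but critical: keep the core deterministic, use agents only at the edges.
    if critical:
        return "automation core, agent at the edges"
    # Fuzzy and lower stakes: an agent, fenced with approvals, tests, and telemetry.
    return "agentic AI with safeguards"
```

The order of the checks matters: stability is tested first, so a critical but fully predictable process still lands on plain automation.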


Architecture patterns that work in production

A practical blueprint blends the reliability of workflows with the flexibility of agents:

- Orchestrator at the center: a workflow engine or message bus triggers either a rules path or an agent path.

- Tool gateways: agents never call systems directly; they invoke approved tools with scoped permissions and rate limits.

- Human-in-the-loop: steps marked high risk require review. Present the agent’s plan, evidence, and proposed action in a diff-like view.

- Memory and retrieval: keep a case file so the agent remembers facts across steps; use retrieval rather than giant prompts to control cost.

- Tracing and replay: log plan, tool calls, outputs, and decisions for auditing and post-incident analysis.

- Fallbacks: agents hand off to deterministic automation or humans on timeout, uncertainty spikes, or policy blocks.
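A tool gateway with tracing can be surprisingly small. The sketch below is one possible shape, under stated assumptions: `ToolGateway`, `PolicyBlocked`, the scope strings, and the per-minute limit are hypothetical names and values, and a production version would persist the trace and enforce limits per agent rather than globally.

```python
import time
from typing import Any, Callable, Dict, List, Set


class PolicyBlocked(Exception):
    """Raised when the gateway refuses a call; the caller should fall back or escalate."""


class ToolGateway:
    def __init__(self, max_calls_per_minute: int = 30,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self._required_scope: Dict[str, str] = {}
        self._max_calls = max_calls_per_minute
        self._clock = clock
        self._recent_calls: List[float] = []
        self.trace: List[dict] = []  # append-only log for audit and replay

    def register(self, name: str, fn: Callable[..., Any], required_scope: str) -> None:
        # Registering a tool is what puts it on the allowlist.
        self._tools[name] = fn
        self._required_scope[name] = required_scope

    def _block(self, name: str, args: dict, reason: str) -> None:
        self.trace.append({"tool": name, "args": args, "outcome": f"blocked:{reason}"})
        raise PolicyBlocked(f"{name}: {reason}")

    def call(self, name: str, agent_scopes: Set[str], **args: Any) -> Any:
        now = self._clock()
        # Sliding window: keep only successful calls from the last 60 seconds.
        self._recent_calls = [t for t in self._recent_calls if now - t < 60]
        if name not in self._tools:
            self._block(name, args, "not_allowlisted")
        if self._required_scope[name] not in agent_scopes:
            self._block(name, args, "missing_scope")
        if len(self._recent_calls) >= self._max_calls:
            self._block(name, args, "rate_limited")
        self._recent_calls.append(now)
        result = self._tools[name](**args)
        self.trace.append({"tool": name, "args": args, "outcome": "ok"})
        return result
```

An agent that catches `PolicyBlocked` has a natural fallback point: hand the case to a deterministic workflow or a human, with `gateway.trace` attached as evidence of what was attempted.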

Popular building blocks include the OpenAI Assistants API, LangChain agents, Microsoft Semantic Kernel, Agents for Amazon Bedrock, and Vertex AI Agent Builder. Each provides primitives for tool use, memory, and orchestration; your job is to add policy, observability, and containment.


Governance, safety, and evaluation you will actually use

Good governance borrows from two credible playbooks: the NIST AI Risk Management Framework and the OWASP Top 10 for LLM Applications. Translate those into concrete controls:

- Identity and access: scope tokens, use per-tool allowlists, log every call.

- Content controls: sanitize inputs, strip secrets, and filter outputs for sensitive data before writing anywhere persistent.

- Prompt-injection defenses: never let untrusted text directly set system prompts or tool parameters. Treat external content as untrusted input, the way you would treat unreviewed code.

- Policy checks: codify what agents may do by data sensitivity, user role, and risk level; integrate approvals where needed.

- Sandbox and test: stage changes, use canary traffic, and include synthetic edge cases.
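The policy-check control above lends itself to code. Here is a minimal sketch, assuming a made-up role allowlist and a three-way decision; the role names, tool names, and sensitivity labels are placeholders, and neither NIST nor OWASP prescribes this particular structure.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_APPROVAL = "require_approval"
    DENY = "deny"


@dataclass(frozen=True)
class ActionRequest:
    tool: str
    user_role: str
    data_sensitivity: str  # assumed labels: "public", "internal", "restricted"
    risk: str              # assumed labels: "low", "high"


# Hypothetical per-role allowlist of tools.
ROLE_ALLOWLIST = {
    "support_agent": {"get_order", "draft_reply"},
    "finance_agent": {"get_invoice", "post_payment"},
}


def evaluate(request: ActionRequest) -> Decision:
    # Deny by default: unknown roles and unlisted tools never run.
    if request.tool not in ROLE_ALLOWLIST.get(request.user_role, set()):
        return Decision.DENY
    # Sensitive data or high-risk actions go to a human before execution.
    if request.data_sensitivity == "restricted" or request.risk == "high":
        return Decision.REQUIRE_APPROVAL
    return Decision.ALLOW
```

Keeping the policy as plain data plus one pure function makes it easy to unit-test and to review in a pull request, which is most of what an auditor will ask for.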

Measure outcomes like a product team, not a lab experiment:

- Task success rate and time to resolution.

- Human acceptance rate for agent proposals.

- Escalation causes (policy blocks, uncertainty, tool errors) to guide improvements.

- Cost per successful outcome (tokens plus tool calls).

- Safety incidents and near misses with root-cause notes.
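Most of these metrics fall out of a simple per-run record. The sketch below assumes a hypothetical `Run` structure with costs already converted to currency; the field names are illustrative, not a standard schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Run:
    succeeded: bool
    seconds: float
    token_cost: float
    tool_cost: float
    human_accepted: Optional[bool] = None  # None when no proposal was reviewed


def summarize(runs: List[Run]) -> dict:
    successes = [r for r in runs if r.succeeded]
    reviewed = [r for r in runs if r.human_accepted is not None]
    # Failed runs still cost money, so total spend covers every run.
    spend = sum(r.token_cost + r.tool_cost for r in runs)
    return {
        "task_success_rate": len(successes) / len(runs) if runs else None,
        "mean_seconds_to_resolution": (
            sum(r.seconds for r in successes) / len(successes) if successes else None
        ),
        "human_acceptance_rate": (
            sum(r.human_accepted for r in reviewed) / len(reviewed) if reviewed else None
        ),
        "cost_per_successful_outcome": spend / len(successes) if successes else None,
    }
```

Dividing total spend by successful outcomes, rather than by all runs, is a deliberate choice: retries and failures inflate the number, which is exactly the signal you want when deciding whether an agent is paying for itself.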


The next 12 months: what to expect

- Better tool-use and planning: models are getting stronger at multi-step reasoning and parallel tool calls, shrinking cycle times.

- Tighter integration with automation stacks: expect your workflow platform to embed agents for unstructured steps and hand-offs.

- Cheaper context and smarter memory: retrieval and short summaries will reduce token burn while improving reliability.

- On-device and edge agents: lightweight models will handle quick decisions locally with privacy benefits.

- Stronger evaluation playbooks: open benchmarks help, but your domain-specific evals will matter most.

In practice, design now for observability, fallbacks, and cost controls so you can adopt these improvements without re-architecting.


Key takeaways you can act on today

- Automation is unbeatable for stable, repeatable, auditable processes. Start there for core systems.

- Agentic AI shines where ambiguity and unstructured data dominate. Use it at the edges with clear guardrails.

- The best results come from layering: workflows call agents for fuzzy steps; agents call workflows for irreversible ones.

- Measure everything in production terms: success rate, cost per outcome, human acceptance, and safety incidents.

- Follow reputable guidance for risk: the NIST AI RMF and the OWASP Top 10 for LLM Applications cover well-known failure modes such as prompt injection and excessive agency.

- Start small, keep logs, review failures weekly, and only then widen the agent’s autonomy.