-

The short answer

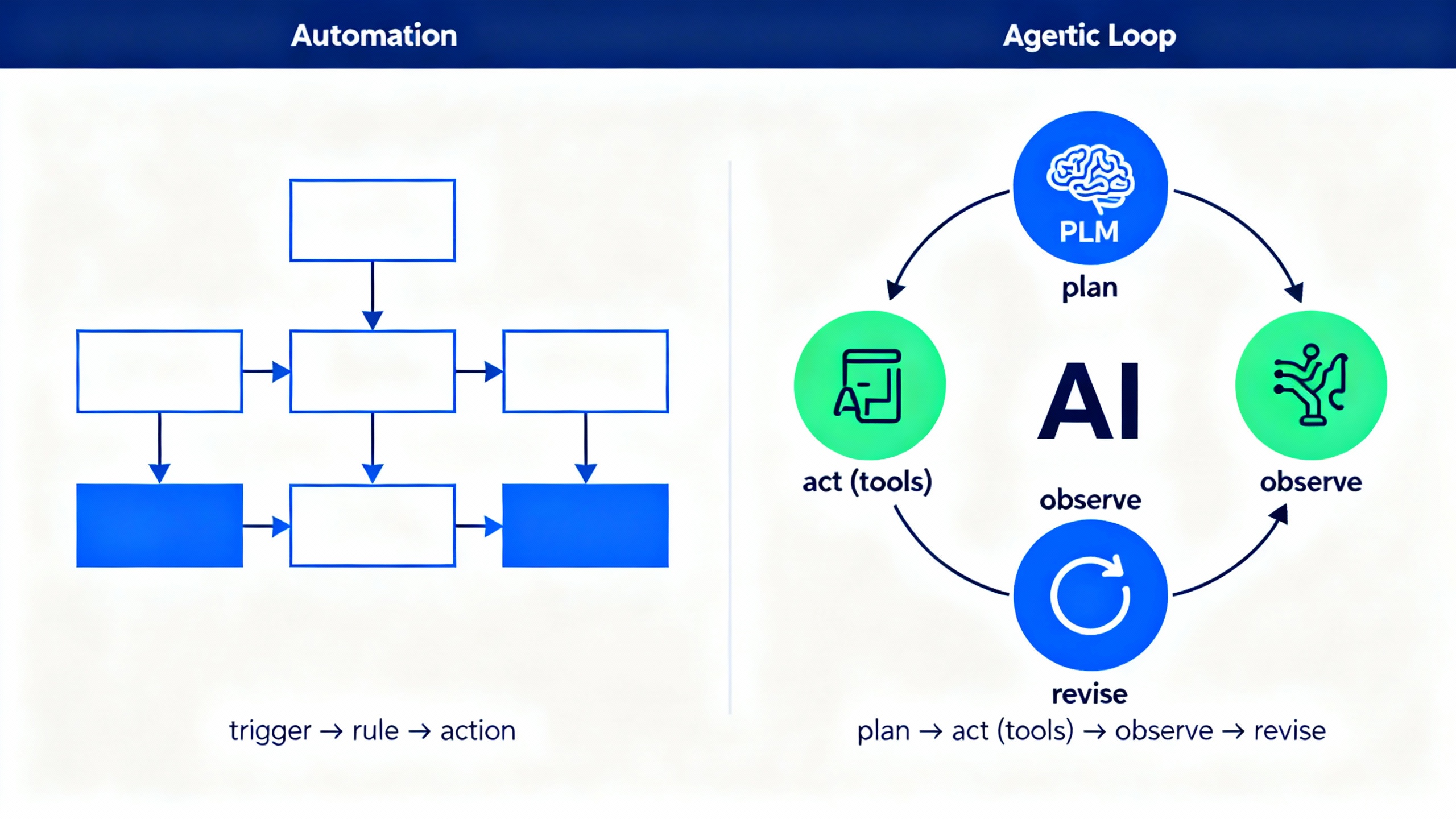

Automation executes predefined, deterministic steps to achieve a known outcome. Think of it as a reliable assembly line: if A happens, do B, then C—every time. Agentic AI, by contrast, is goal-seeking. It uses large language models (LLMs) to reason, plan, choose tools, and adapt its next move based on feedback. You give the agent a destination; it figures out the route, and if the route changes, it finds another way.

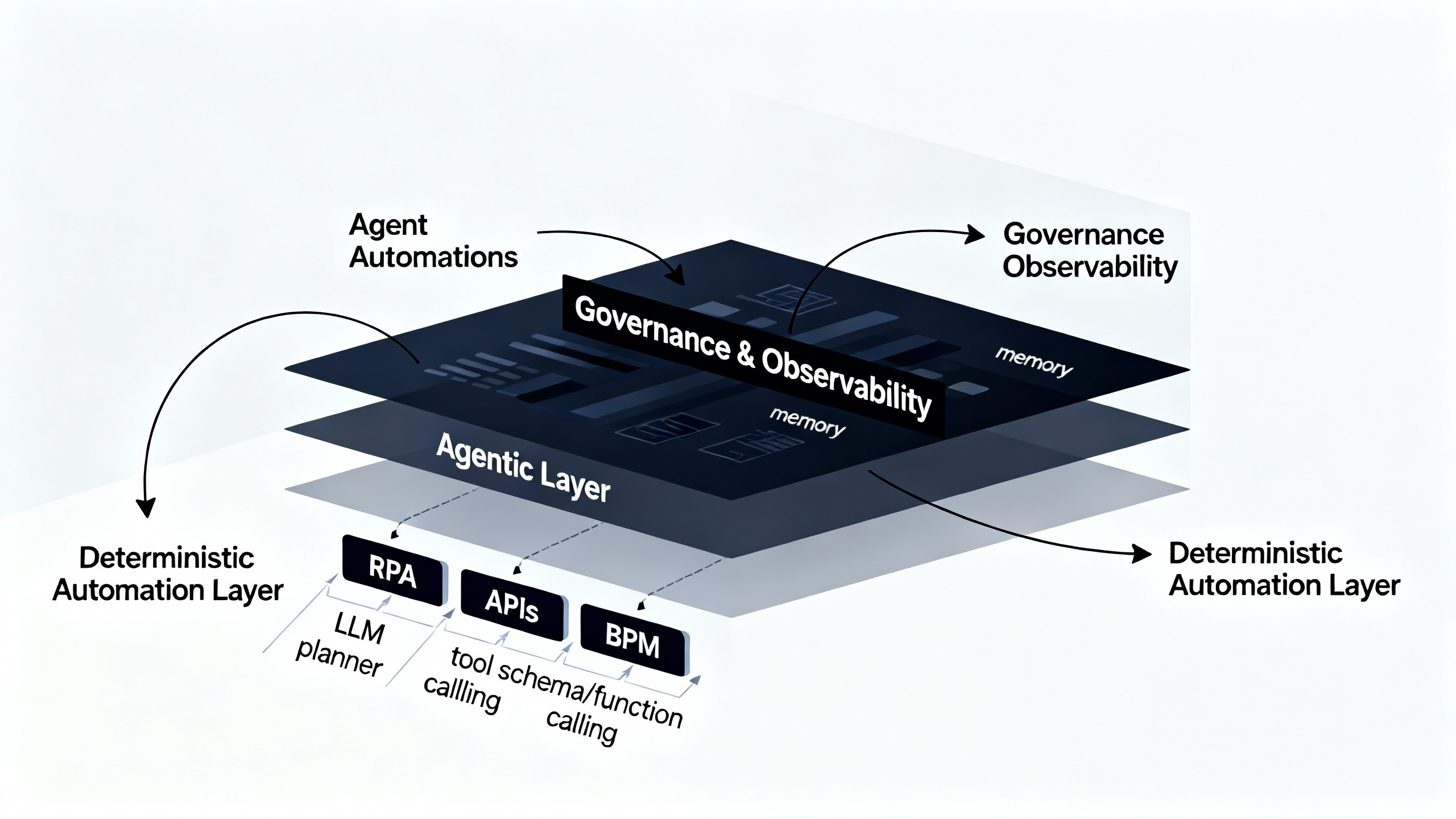

That flexibility is powerful, but it also means outcomes can vary, so you need controls and monitoring around it. In practice, many teams get the best results by layering the two: automation for stable, high-volume tasks; agents for messy, variable ones; and a clear handoff between them.

-

What is automation?

Automation is the orchestration of repeatable steps triggered by events or schedules. It’s defined by predictable inputs, explicit rules, and fixed execution paths. Common forms include:

- Robotic Process Automation (RPA) for UI-level tasks (e.g., UiPath or Power Automate Desktop).

- Workflow engines and iPaaS platforms for API-first integrations and approvals (e.g., Power Automate Cloud, Zapier, Make).

- Business Process Management (BPM) systems, typically modeled in BPMN, that cover multi-step processes, SLAs, and escalations.

Strengths: high reliability, clear auditability, straightforward compliance, and predictable cost. Weaknesses: brittleness when UIs or schemas change, and limited ability to handle ambiguity (e.g., “interpret this unusual customer email”). When the world is stable, automation is a machine that hums; when the world gets weird, it needs a human—or an agent.

-

What is agentic AI?

Agentic AI is a software approach where an LLM operates in a loop: understand the goal, plan a step, call a tool (API, search, database, RPA bot), observe the result, then decide what to do next. This loop—often called “plan-act-observe”—can be implemented with patterns like ReAct, graph-based controllers like LangGraph, or multi-agent frameworks such as Microsoft AutoGen.

Agents excel when tasks are underspecified, variable, or require synthesis across fuzzy inputs: drafting nuanced messages, triaging tickets, reconciling imperfect data, or sequencing several different tools on the fly. They can also call deterministic automations—using, for example, function calling to trigger a precise API workflow.

The trade-off: agents are non-deterministic. They’re sensitive to context and can make poor choices without guardrails. Good agentic design emphasizes tool constraints, schemas, memory boundaries, and evaluation.

-

Key differences at a glance

Automation vs Agentic AI

| Dimension | Automation | Agentic AI |
| --- | --- | --- |
| Trigger | Event, schedule, or explicit rule | Goal or instruction, then self-directed steps |
| Inputs | Structured, validated | Unstructured + structured; uses language understanding |
| Decision-making | Deterministic rules/flows | LLM reasoning/planning with tools |
| Adaptability | Low (brittle to change) | High (replans, chooses tools) |
| Observability | Logs of defined steps | Reasoning traces, tool call logs, outcomes |
| Governance | Traditional change control, SOPs | AI risk controls, evals, sandboxing, human-in-the-loop |
| Typical ROI timeline | Fast for stable, high-volume tasks | Fast in ambiguous workflows; requires guardrails to scale |
| Best for | High-volume, repeatable processes | Messy, variable, cross-tool synthesis |

-

When to use which (and how to combine them)

Use automation when:

- The path is known: steps rarely change, SLAs are strict.

- Inputs are structured and validated upstream.

- Compliance requires repeatability and traceability with minimal variance.

Use agentic AI when:

- Inputs are messy (emails, PDFs, chats) and require interpretation.

- The process has branching ambiguity (exceptions, incomplete data).

- The solution requires flexible tool sequencing or research-like work.

Use both together:

- Agent over automation: the agent interprets the goal, then delegates atomic steps to robust automations (APIs, RPA) and checks outcomes.

- Automation with an AI step: keep the existing workflow, but add an LLM for a “judgment” step (classification, extraction, drafting), with confidence thresholds to route to human review.

- Human in the loop: the agent proposes; a person approves; a deterministic automation executes. This reduces error while preserving speed.
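
The "automation with an AI step" pattern can be sketched as a small routing function. This is a minimal illustration, not a reference implementation: `classify_email` is a hypothetical stand-in for an LLM call, and the labels, confidence values, and the `0.85` threshold are assumptions chosen only to make the example runnable.

```python
from dataclasses import dataclass

# Hypothetical threshold; in practice, tune it against labelled data.
REVIEW_THRESHOLD = 0.85


@dataclass
class Classification:
    label: str
    confidence: float  # 0.0 to 1.0, as reported by the (stubbed) model step


def classify_email(text: str) -> Classification:
    """Stand-in for the LLM judgment step; a real workflow would call a model."""
    lowered = text.lower()
    if "refund" in lowered:
        return Classification("refund_request", 0.95)
    if "invoice" in lowered:
        return Classification("billing_question", 0.70)
    return Classification("other", 0.40)


def route(text: str, threshold: float = REVIEW_THRESHOLD) -> str:
    """Send confident results to automation and everything else to a person."""
    result = classify_email(text)
    if result.confidence >= threshold:
        return f"auto:{result.label}"
    return "human_review"


if __name__ == "__main__":
    for message in ("I want a refund", "Question about my invoice"):
        print(message, "->", route(message))
```

The surrounding workflow stays deterministic: only the classification is probabilistic, and the threshold decides whether its output is trusted or reviewed.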

-

How they work under the hood

Automation typically looks like a state machine or BPMN diagram. Each node uses a connector (API, UI automation) with expected inputs/outputs. Errors are handled with retries, compensations, or fallbacks. Observability is about job status, task duration, and error codes.
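
To make the state-machine view concrete, here is a minimal sketch of a fixed workflow with retries and a fallback. The step names (`ingest`, `validate`, `post`), the simulated timeout, and the `run_workflow` helper are all hypothetical; a real workflow engine would supply these pieces for you.

```python
import time
from typing import Callable, Dict, Optional

# Each step mutates the shared context and returns the next state name,
# or None when the workflow is finished.
Step = Callable[[dict], Optional[str]]


def run_workflow(steps: Dict[str, Step], start: str, context: dict,
                 max_retries: int = 2, backoff_seconds: float = 0.0) -> list:
    """Run a fixed state machine; retry a failing step, then fall back."""
    history = []
    state: Optional[str] = start
    while state is not None:
        for _attempt in range(max_retries + 1):
            try:
                next_state = steps[state](context)
                history.append((state, "ok"))
                break
            except Exception as exc:  # record the error, then retry
                history.append((state, f"error: {exc}"))
                time.sleep(backoff_seconds)
        else:
            # Retries exhausted: stop and record the fallback path.
            history.append(("failed", "fallback"))
            return history
        state = next_state
    return history


def ingest(ctx: dict) -> Optional[str]:
    ctx["total"] = 120.0
    return "validate"


def validate(ctx: dict) -> Optional[str]:
    ctx["attempts"] = ctx.get("attempts", 0) + 1
    if ctx["attempts"] == 1:
        raise RuntimeError("ERP timeout")  # simulated transient failure
    return "post"


def post(ctx: dict) -> Optional[str]:
    ctx["posted"] = True
    return None


STEPS = {"ingest": ingest, "validate": validate, "post": post}

if __name__ == "__main__":
    for entry in run_workflow(STEPS, "ingest", {}):
        print(entry)
```

The returned history doubles as the observability record: one entry per attempt, with a status or error string for each.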

Agentic systems add a reasoning engine. An LLM receives a goal and tool schema, then iteratively plans steps. It can:

- Call tools via schemas (function calling) to fetch data or take actions.

- Use memory (ephemeral or vector) to keep context across steps.

- Reflect and revise plans when observations don’t match expectations.

Reliability comes from constraints: schema validation, output parsers, tool whitelists, step limits, evaluation tests, and strong observability (prompt + tool call logs). For orchestration, many teams use graph controllers (e.g., LangGraph) or workflow engines with agentic nodes.
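
The loop and two of the constraints above (a tool allowlist and a step limit) can be sketched in a few lines. This is an illustration under stated assumptions: `stub_planner` stands in for the LLM, and the tool names and return shapes are hypothetical rather than taken from any particular framework.

```python
from typing import Callable, Dict, List, Tuple

# Allowlisted tools: the only actions the agent is permitted to take.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
    "search_kb": lambda query: f"kb article about {query}",
}

Planner = Callable[[str, List[str]], Tuple[str, str]]


def stub_planner(goal: str, observations: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM: returns (tool_name, argument) or ("finish", answer)."""
    if not observations:
        return ("lookup_order", "A123")
    return ("finish", f"Done: {observations[-1]}")


def run_agent(goal: str, planner: Planner,
              tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> dict:
    """Plan, act, observe; stop on finish, a disallowed tool, or the step limit."""
    observations: List[str] = []
    log: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        action, arg = planner(goal, observations)  # plan
        log.append((action, arg))                  # log every decision
        if action == "finish":
            return {"status": "finished", "answer": arg, "log": log}
        if action not in tools:                    # enforce the allowlist
            return {"status": "blocked", "answer": None, "log": log}
        observations.append(tools[action](arg))    # act, then observe
    return {"status": "step_limit", "answer": None, "log": log}


if __name__ == "__main__":
    print(run_agent("Where is order A123?", stub_planner, TOOLS))
```

Because the planner is the only non-deterministic part, swapping the stub for a real model leaves the allowlist, the step limit, and the decision log unchanged.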

-

Risks and governance that actually work

Automation risks center on breakage (changed UIs/APIs) and silent failures (bad mappings). Agentic AI adds model risks: hallucination, overreach (doing something it shouldn’t), and subtle bias or data leakage. Good governance blends traditional ops with AI-specific controls.

Practical guardrails:

- Principle of least privilege for tools and data; scoped API keys and role-based access.

- Hard limits on steps, cost, and action types per goal.

- Confidence thresholds and routing: low confidence → human review.

- Red-teaming prompts and tools; adversarial tests in staging.

- Continuous evaluation suites (accuracy, adherence to policy, cost-to-value).

- Policy alignment with the NIST AI Risk Management Framework and ISO/IEC 42001.

Tip: Guardrails to add now

- Force all writes through deterministic automations; let the agent read and propose.

- Require structured outputs (JSON schemas) and validate every field.

- Log every prompt, tool call, and decision for post-hoc audits.
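
A minimal sketch of those three guardrails together, using only the standard library. The field names, allowed actions, and priority range are hypothetical; a production system would more likely use a dedicated schema validator and a durable audit store rather than an in-memory list.

```python
import json

# Hypothetical schema for a proposed action: field name -> expected type.
PROPOSAL_FIELDS = {"action": str, "ticket_id": str, "priority": int}
ALLOWED_ACTIONS = {"set_priority", "add_note"}

AUDIT_LOG = []  # in-memory stand-in for a durable audit trail


def validate_proposal(raw: str) -> dict:
    """Parse the agent's output and check every field; raise ValueError if bad."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if set(data) != set(PROPOSAL_FIELDS):
        diff = sorted(set(data) ^ set(PROPOSAL_FIELDS))
        raise ValueError(f"unexpected or missing fields: {diff}")
    for name, expected in PROPOSAL_FIELDS.items():
        value = data[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"field {name!r} must be {expected.__name__}")
    if data["action"] not in ALLOWED_ACTIONS:
        raise ValueError(f"action {data['action']!r} is not allowed")
    if not 1 <= data["priority"] <= 5:
        raise ValueError("priority must be between 1 and 5")
    return data


def apply_proposal(raw: str) -> bool:
    """Deterministic write path: validate, log the decision, then 'write'."""
    try:
        validate_proposal(raw)
    except ValueError as exc:
        AUDIT_LOG.append({"raw": raw, "accepted": False, "reason": str(exc)})
        return False
    AUDIT_LOG.append({"raw": raw, "accepted": True, "reason": ""})
    # A real system would call the ticketing API here.
    return True


if __name__ == "__main__":
    print(apply_proposal('{"action": "set_priority", "ticket_id": "T-1", "priority": 2}'))
    print(apply_proposal('{"action": "delete_ticket", "ticket_id": "T-1", "priority": 2}'))
```

The agent never writes directly: it only produces a JSON proposal, and the deterministic function decides whether that proposal is applied.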

-

ROI, cost, and how to measure value

Automation ROI is straightforward to quantify: time saved per run × run frequency, plus error reduction and avoided rework, weighed against the cost to build and run the automation. Agentic ROI adds qualitative benefits like better customer experience (CX) or faster resolution of edge cases. Track both with a shared scorecard:

- Efficiency: cycle time, touches per case, cost per transaction.

- Quality: first-pass yield, error rate, human override rate.

- Reliability: incident count, MTTR, drift in model behavior.

- Financials: cost-to-serve, savings vs. baseline, incremental revenue.

For agents, also watch: tool success rate, plan depth (avg steps), and containment (percent resolved without escalation). Tie goals to OKRs—e.g., “Reduce ticket backlog by 30% while keeping override rate below 5%.”
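
As a rough sketch, the savings arithmetic and the agent-specific metrics can be computed like this. The function names, the per-case record shape (`escalated`, `overridden`, `steps`), and the sample numbers are assumptions made for illustration, not measured results.

```python
from typing import Dict, List


def automation_savings(minutes_saved_per_run: float, runs_per_month: int,
                       hourly_cost: float) -> float:
    """Monthly labor savings: time saved per run x run frequency x cost of time."""
    return minutes_saved_per_run / 60 * runs_per_month * hourly_cost


def agent_metrics(cases: List[dict]) -> Dict[str, float]:
    """Summarize containment, override rate, and plan depth over handled cases."""
    total = len(cases)
    if total == 0:
        raise ValueError("need at least one case")
    return {
        # Share of cases resolved without escalation to a person.
        "containment": sum(not c["escalated"] for c in cases) / total,
        # Share of cases where a person overrode the agent's output.
        "override_rate": sum(c["overridden"] for c in cases) / total,
        # Average number of steps the agent took per case.
        "avg_plan_depth": sum(c["steps"] for c in cases) / total,
    }


if __name__ == "__main__":
    # Illustrative inputs only: 30 minutes saved, 200 runs a month, 40 per hour.
    print(automation_savings(30, 200, 40.0))  # 4000.0
    sample = [
        {"escalated": False, "overridden": False, "steps": 2},
        {"escalated": False, "overridden": True, "steps": 4},
        {"escalated": False, "overridden": False, "steps": 3},
        {"escalated": True, "overridden": False, "steps": 7},
    ]
    print(agent_metrics(sample))
```

Comparing `override_rate` against a target such as the 5% in the OKR example gives a simple pass/fail check for each reporting period.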

-

A practical 90-day implementation playbook

- Days 0–15: Identify 2–3 candidate processes. Map inputs, failure modes, and compliance constraints. Define success metrics and guardrails.

- Days 16–30: Automate the stable spine (APIs, RPA). Add observability from day one. Build stubs for risky actions.

- Days 31–60: Introduce an agentic layer for the ambiguous bits—classification, drafting, exception handling. Gate risky actions behind approvals.

- Days 61–90: Expand test coverage, add evaluation suites, and pilot with a small user group. Tune prompts, tool schemas, and thresholds. Document outcomes and decide to scale, refine, or retire.

-

Real-world scenarios (side-by-side)

- Invoice processing: Use automation to ingest PDFs, extract via OCR, validate totals against ERP, and post entries. Add an agent to interpret weird invoices, request missing info, or reconcile vendor name variations before the deterministic posting step.

- IT help desk: Automate ticket routing and password resets. Let an agent triage free-form requests, ask clarifying questions, check the knowledge base, and draft a response. High-risk actions (e.g., account deprovisioning) must flow back to a deterministic runbook with approval.

- Sales outreach: Automate list building and CRM hygiene. Use an agent to research accounts, tailor outreach, and schedule follow-ups—while enforcing templates, tone rules, and a daily send cap.

The pattern is consistent: automation supplies the safe, repeatable “muscle”; the agent supplies “judgment,” then hands execution back to deterministic automation when the stakes are high.

-

The bottom line

Automation and agentic AI aren’t rivals; they’re complementary gears. Automation gives you speed and certainty. Agents give you adaptability and leverage. Design them to work together—from architecture and governance to metrics—and you’ll get systems that are both efficient and resilient. The winning strategy is simple: automate the known, agent the unknown, and connect them with guardrails you can trust.